The other day I wanted to set up a website. That led me to look into static blogs again, which in turn led me to Hugo.

Here is a short guide to how I set it all up. I wanted to keep the version of the Hugo runtime checked into git. I know from past experience that Go modules can do this: if you use `go run` from within the module directory, it runs the version pinned in `go.mod`. That seemed like a good starting point.

```sh
# Set up the repo
mkdir keyneston.com
cd keyneston.com
git init
go mod init keyneston.com

# Install Hugo
go get -tags extended github.com/gohugoio/hugo

# Initialise Hugo, with --force because git and other files
# already exist in the current directory
go run github.com/gohugoio/hugo new site . --force
```

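Now I just needed to make it a bit easier to run Hugo commands. For this I created a file called `./scripts/hugo`:

```sh
#!/bin/sh
# Run the Hugo version pinned in go.mod, forwarding all arguments unchanged.
go run github.com/gohugoio/hugo "$@"
```

Make it executable with `chmod +x scripts/hugo` so it can be called directly.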

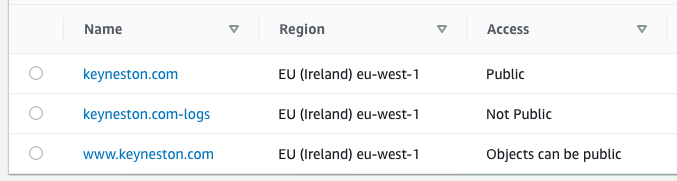

Next up, we need to make it deployable. This meant creating an S3 bucket (or three):

- First, the `keyneston.com` bucket. This is where the website is uploaded, and it is configured for static website hosting via the S3 console.
- Next, the `keyneston.com-logs` bucket. The first bucket is configured to send its access logs here.
- Finally, the `www.keyneston.com` bucket. This one is also configured for static website hosting, with the redirect option set to send requests to the main site.

It is a good idea to put a CDN in front of an S3 bucket that is serving static content. Being very cheap, I went with Cloudflare. For Cloudflare to be able to read from the bucket, I had to set a bucket policy that allows `s3:GetObject` only from Cloudflare's IP ranges. I shamelessly stole one that I found on the internet:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::keyneston.com/*",
      "Condition": {
        "IpAddress": {
          "aws:SourceIp": [
            "2400:cb00::/32",
            "2405:8100::/32",
            "2405:b500::/32",
            "2606:4700::/32",
            "2803:f800::/32",
            "2c0f:f248::/32",
            "2a06:98c0::/29",
            "103.21.244.0/22",
            "103.22.200.0/22",
            "103.31.4.0/22",
            "104.16.0.0/12",
            "108.162.192.0/18",
            "131.0.72.0/22",
            "141.101.64.0/18",
            "162.158.0.0/15",
            "172.64.0.0/13",
            "173.245.48.0/20",
            "188.114.96.0/20",
            "190.93.240.0/20",
            "197.234.240.0/22",
            "198.41.128.0/17"
          ]
        }
      }
    }
  ]
}
```
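To see what the `aws:SourceIp` condition is doing, here is a minimal Go sketch that checks an address against the same CIDR list. AWS evaluates the condition itself; `allowedSource` is a hypothetical helper, and `net/netip` needs Go 1.18 or later (newer than the Go 1.13 used elsewhere in this post). Cloudflare publishes its current ranges and they do change occasionally, so a copied list like this one can go stale.

```go
package main

import (
	"fmt"
	"net/netip"
)

// cloudflareRanges mirrors the aws:SourceIp list in the bucket policy.
var cloudflareRanges = []string{
	"2400:cb00::/32", "2405:8100::/32", "2405:b500::/32", "2606:4700::/32",
	"2803:f800::/32", "2c0f:f248::/32", "2a06:98c0::/29",
	"103.21.244.0/22", "103.22.200.0/22", "103.31.4.0/22", "104.16.0.0/12",
	"108.162.192.0/18", "131.0.72.0/22", "141.101.64.0/18", "162.158.0.0/15",
	"172.64.0.0/13", "173.245.48.0/20", "188.114.96.0/20", "190.93.240.0/20",
	"197.234.240.0/22", "198.41.128.0/17",
}

// allowedSource reports whether ip falls inside any of the CIDR ranges,
// roughly what an IpAddress condition on aws:SourceIp checks.
func allowedSource(ip string, ranges []string) (bool, error) {
	addr, err := netip.ParseAddr(ip)
	if err != nil {
		return false, err
	}
	for _, r := range ranges {
		prefix, err := netip.ParsePrefix(r)
		if err != nil {
			return false, fmt.Errorf("bad range %q: %w", r, err)
		}
		// An IPv4 address never matches an IPv6 prefix and vice versa.
		if prefix.Contains(addr) {
			return true, nil
		}
	}
	return false, nil
}

func main() {
	for _, ip := range []string{"104.16.1.1", "203.0.113.7", "2606:4700::1"} {
		ok, err := allowedSource(ip, cloudflareRanges)
		if err != nil {
			fmt.Println(ip, "error:", err)
			continue
		}
		fmt.Printf("%s allowed=%v\n", ip, ok)
	}
}
```

Anything outside those ranges, including a browser going straight to the S3 website endpoint, gets denied, which is what forces traffic through the CDN.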

Then I had to configure Hugo to deploy to the S3 bucket. This took a trivially small amount of TOML in the site config:

```toml
[[deployment.targets]]
# An arbitrary name for this target.
name = "aws"
# S3; see https://gocloud.dev/howto/blob/#s3
URL = "s3://keyneston.com/?region=eu-west-1"
```
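The comment in that config points at gocloud.dev's S3 URL format. As a rough illustration of how the URL breaks down, the bucket name sits in the host position and the region is passed as a query parameter. The hypothetical `parseS3URL` helper below just pulls the two apart with `net/url`; it is not Hugo's or gocloud.dev's own parsing code.

```go
package main

import (
	"fmt"
	"net/url"
)

// s3Target is a hypothetical type holding the parts of the deploy URL.
type s3Target struct {
	Bucket string
	Region string
}

// parseS3URL splits a URL like "s3://keyneston.com/?region=eu-west-1"
// into the bucket (the host part) and the region (a query parameter).
func parseS3URL(raw string) (s3Target, error) {
	u, err := url.Parse(raw)
	if err != nil {
		return s3Target{}, err
	}
	if u.Scheme != "s3" {
		return s3Target{}, fmt.Errorf("unexpected scheme %q", u.Scheme)
	}
	if u.Host == "" {
		return s3Target{}, fmt.Errorf("missing bucket name in %q", raw)
	}
	return s3Target{Bucket: u.Host, Region: u.Query().Get("region")}, nil
}

func main() {
	t, err := parseS3URL("s3://keyneston.com/?region=eu-west-1")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("bucket=%s region=%s\n", t.Bucket, t.Region)
}
```

Both values have to match the bucket created earlier: the bucket name exactly, and the region the bucket actually lives in.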

Running `./scripts/hugo deploy` showed this all working. I then set up DNS in the Cloudflare panel and away I went; testing showed everything working.

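To simplify things, I wrote a small `Makefile` with the common commands:

```makefile
# Run the local development server.
.PHONY: run
run:
	./scripts/hugo server

# Fetch any git submodules the site depends on.
.PHONY: git-submodule
git-submodule:
	git submodule update --init --recursive

# Build the site; hugo with no subcommand builds into public/.
.PHONY: build
build:
	./scripts/hugo

# Upload the built site to the deployment target in the config.
.PHONY: deploy
deploy:
	./scripts/hugo deploy
```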

I had one last goal for all of this: setting up GitHub Actions so that the site would auto-deploy on pushes to `master`. This required creating an AWS IAM policy and a user to do the deploys. The policy is below. It is critical that when listing your resources you include both `"arn:aws:s3:::keyneston.com"` and `"arn:aws:s3:::keyneston.com/*"`. The `/*` on the second one is what makes the policy apply to the objects in the S3 bucket, not just the bucket itself. I can't tell you how many times my coworkers and I have been bitten by that.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "s3:GetAccessPoint",
        "s3:PutAccountPublicAccessBlock",
        "s3:GetAccountPublicAccessBlock",
        "s3:ListAllMyBuckets",
        "s3:ListAccessPoints",
        "s3:ListJobs",
        "s3:CreateJob",
        "s3:HeadBucket"
      ],
      "Resource": "*"
    },
    {
      "Sid": "VisualEditor1",
      "Effect": "Allow",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::keyneston.com",
        "arn:aws:s3:::keyneston.com/*"
      ]
    }
  ]
}
```
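A simplified Go sketch of why both ARNs are needed: bucket-level actions such as `s3:ListBucket` are checked against the bucket ARN, while object-level actions such as `s3:PutObject` are checked against `bucket/key`. The matcher only understands `*` wildcards and ignores the rest of IAM's evaluation rules; `matchResource`, `allowed`, and the object key `posts/index.html` are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// matchResource is a simplified stand-in for IAM resource matching:
// "*" matches any run of characters (including "/"); everything else
// must match literally. IAM's "?" and policy variables are ignored.
func matchResource(pattern, arn string) bool {
	parts := strings.Split(pattern, "*")
	if len(parts) == 1 {
		return pattern == arn
	}
	// The first literal chunk must be a prefix of the ARN.
	if !strings.HasPrefix(arn, parts[0]) {
		return false
	}
	rest := arn[len(parts[0]):]
	// Middle chunks must appear in order; take the leftmost match each time.
	for _, mid := range parts[1 : len(parts)-1] {
		i := strings.Index(rest, mid)
		if i < 0 {
			return false
		}
		rest = rest[i+len(mid):]
	}
	// The last chunk must be a suffix of whatever is left.
	return strings.HasSuffix(rest, parts[len(parts)-1])
}

// allowed reports whether any resource pattern in a statement covers arn.
func allowed(resources []string, arn string) bool {
	for _, r := range resources {
		if matchResource(r, arn) {
			return true
		}
	}
	return false
}

func main() {
	bucket := "arn:aws:s3:::keyneston.com"
	object := "arn:aws:s3:::keyneston.com/posts/index.html" // hypothetical key

	bucketOnly := []string{bucket}
	objectsOnly := []string{"arn:aws:s3:::keyneston.com/*"}
	both := []string{bucket, "arn:aws:s3:::keyneston.com/*"}

	fmt.Println("bucket ARN only, object:", allowed(bucketOnly, object))
	fmt.Println("/* only, bucket:", allowed(objectsOnly, bucket))
	fmt.Println("both, bucket:", allowed(both, bucket))
	fmt.Println("both, object:", allowed(both, object))
}
```

Each single-entry list leaves one kind of action uncovered, which is exactly the failure mode described above.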

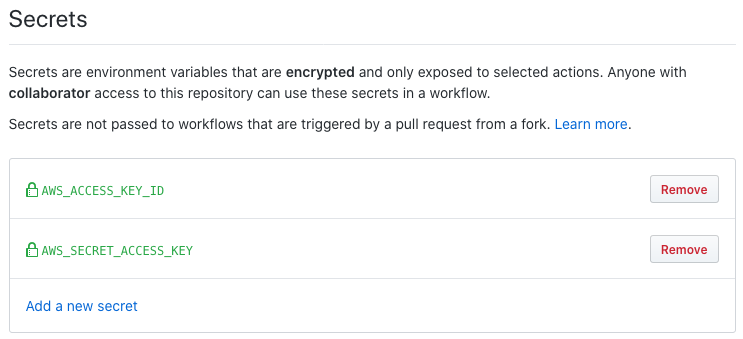

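Once I had created the policy and attached it to the deploy user, I set up two secrets for the GitHub repository, `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`, holding that user's access key.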

Finally, the GitHub Actions workflow in `.github/workflows/go.yml` pulls it all together:

```yaml
name: Go

on:
  push:
    branches: [ master ]
  pull_request:
    branches: [ master ]

jobs:
  build:
    name: Build and Deploy
    runs-on: ubuntu-latest
    steps:
    - name: Set up Go 1.13
      uses: actions/setup-go@v1
      with:
        go-version: 1.13
      id: go

    - name: Check out code into the Go module directory
      uses: actions/checkout@v2

    - name: Get dependencies
      run: |
        go get -v -t -d ./...
        if [ -f Gopkg.toml ]; then
            curl https://raw.githubusercontent.com/golang/dep/master/install.sh | sh
            dep ensure
        fi

    - name: Build
      run: make git-submodule && make build

    # Pull requests only build; AWS is touched on pushes to master only.
    - name: "Configure AWS Credentials"
      if: github.event_name == 'push'
      uses: aws-actions/configure-aws-credentials@v1
      with:
        aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
        aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
        aws-region: eu-west-1

    - name: Deploy
      if: github.event_name == 'push'
      run: make deploy
```

Then it was just a matter of testing and making sure it all worked together.

I would like to play around with doing the ops/config side of this via Terraform. It has a Cloudflare provider, and I am already familiar with managing S3 through its AWS provider. Normally I start with Terraform, but for whatever reason I didn't on this project. Oh well, maybe soon.